-

PDF

- Split View

-

Views

-

Cite

Cite

Florian Berg, Julian F Kölbel, Roberto Rigobon, Aggregate Confusion: The Divergence of ESG Ratings, Review of Finance, Volume 26, Issue 6, November 2022, Pages 1315–1344, https://doi.org/10.1093/rof/rfac033

Close - Share Icon Share

Abstract

This paper investigates the divergence of environmental, social, and governance (ESG) ratings based on data from six prominent ESG rating agencies: Kinder, Lydenberg, and Domini (KLD), Sustainalytics, Moody’s ESG (Vigeo-Eiris), S&P Global (RobecoSAM), Refinitiv (Asset4), and MSCI. We document the rating divergence and map the different methodologies onto a common taxonomy of categories. Using this taxonomy, we decompose the divergence into contributions of scope, measurement, and weight. Measurement contributes 56% of the divergence, scope 38%, and weight 6%. Further analyzing the reasons for measurement divergence, we detect a rater effect where a rater’s overall view of a firm influences the measurement of specific categories. The results call for greater attention to how the data underlying ESG ratings are generated.

1. Introduction

Environmental, social, and governance (ESG) rating providers1 have become influential institutions. A total of 3,038 investors representing over $100 trillion in combined assets have signed a commitment to integrate ESG information into their investment decisions (PRI, 2020). Sustainable investing is growing quickly and mutual funds that invest according to ESG ratings experience sizable inflows (Hartzmark and Sussman, 2019). Due to these trends, more and more investors rely on ESG ratings to obtain a third-party assessment of corporations’ ESG performance. A growing number of academic studies rely on ESG ratings for their empirical analysis (see, e.g., Servaes and Tamayo, 2013; Flammer, 2015; Liang and Renneboog, 2017; Lins, Servaes, and Tamayo, 2017; Albuquerque, Koskinen, and Zhang, 2019). As a result, ESG ratings increasingly influence decisions, with potentially far-reaching effects on asset prices and corporate policies.

However, ESG ratings from different providers disagree substantially, as previously shown in Chatterji et al. (2016). We confirm this finding in our data set, where the correlations between ESG ratings range from 0.38 to 0.71. This is based on ESG ratings from six different raters: KLD, Sustainalytics, Moody’s ESG (previously Vigeo-Eiris), S&P Global (previously RobecoSAM), Refinitiv (previously Asset4), and MSCI. This disagreement has several important consequences. First, it makes it difficult to evaluate the ESG performance of companies, funds, and portfolios, which is the primary purpose of ESG ratings. Second, ESG rating divergence decreases companies’ incentives to improve their ESG performance. Companies receive mixed signals from rating agencies about which actions are expected and will be valued by the market. This might lead to underinvestment in ESG improvement activities ex ante. Third, markets are less likely to price firms’ ESG performance ex post. ESG performance may be fundamentally value-relevant or affect asset prices through investor tastes (Heinkel, Kraus, and Zechner, 2001). However, in both cases, the divergence of the ratings disperses the effect of ESG performance on asset prices. Fourth, the disagreement shows that it is difficult to link CEO compensation to ESG performance. Contracts are likely to be incomplete and CEOs may optimize for one particular rating while underperforming in other important ESG issues—that is, CEOs might “hit the target” set by the rating but “miss the point” of improving the firm’s ESG performance more broadly. Finally, the divergence of ratings poses a challenge for empirical research, as using one rater versus another may alter a study’s results and conclusions. The divergence of ESG ratings introduces uncertainty into any decision taken based on ESG ratings and, therefore, represents a challenge for a wide range of decision-makers.

This paper investigates what drives the divergence of sustainability ratings. Chatterji et al. (2016) have taken an important first step in this regard, providing two reasons for the divergence: What ESG raters choose to measure, and whether it is measured consistently, which the authors term “theorization” and “commensurability.” In their empirical analysis, the authors show that both differences in theorization and low commensurability play a role. However, their analysis leaves open to what extent each of these components drives divergence. As a result, it remains unclear whether a better articulation of what is measured could resolve the divergence or whether measurement itself is the central problem. A key reason for this remaining gap is that Chatterji et al. (2016) rely on a data set that contains only a small subset of the underlying indicators that make up the different ESG ratings. To advance on this front, this paper provides a quantitative decomposition of ESG rating divergence, relying on six ESG ratings along with the complete set of 709 underlying indicators.

We identify three distinct sources of divergence. “Scope divergence” refers to the situation where ratings are based on different sets of attributes. One rating agency may include lobbying activities, while another might not, causing the two ratings to diverge. “Measurement divergence” refers to a situation where rating agencies measure the same attribute using different indicators. For example, a firm’s labor practices could be evaluated on the basis of workforce turnover or by the number of labor-related court cases taken against the firm. Finally, “weight divergence” emerges when rating agencies take different views on the relative importance of attributes.2 For example, the labor practices indicator may enter the final rating with greater weight than the lobbying indicator. The contributions of scope, measurement, and weight divergence are intertwined, making it difficult to interpret the difference between two ESG ratings.

We approach the problem in three steps. First, we categorize all 709 indicators provided by the different data providers into a common taxonomy of sixty-four categories. This categorization is a critical step in our methodology, as it allows us to observe the scope of categories covered by each rating and to contrast measurements by different raters within the same category. We create a category whenever at least two indicators from different rating agencies pertain to the same attribute. Based on the taxonomy, we calculate rater-specific category scores by averaging indicators that were assigned to the same category. Second, we regress the original rating on those category scores. The regression models yield fitted versions of the original ratings and we can compare these fitted ratings to each other. Third, we decompose the divergence into the contributions of scope, measurement, and weight.

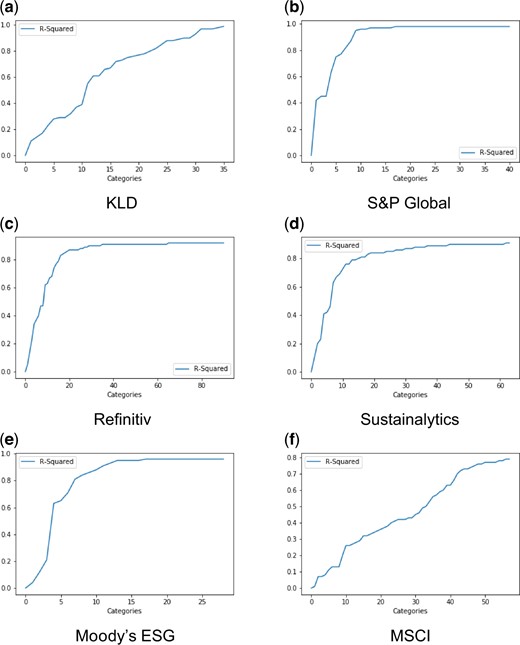

Our study yields three results. First, we show that it is possible to estimate the implied aggregation rule used by the rating agencies with an accuracy of 79–99% based on our common taxonomy. This demonstrates that although ESG ratings have incompatible structures, it is possible to fit them into a consistent framework that reveals in detail how much and for what reason ratings differ. Second, we find that measurement divergence is the main driver of rating divergence, contributing 56% of the divergence. Scope divergence is also important, contributing 38%, while weight divergence contributes a mere 6%. Third, we find that measurement divergence is in part driven by a “rater effect.” This is also known as the “halo effect,” meaning that a firm receiving a high score in one category is more likely to receive high scores in all the other categories from that same rater. The rater effect is substantial. Controlling for which firm is rated and in which category the firm is rated, the rater effect explains 15% of the variation of category scores.

We perform several robustness checks. First, we evaluate the sensitivity of the results to our taxonomy. The taxonomy is an approximation because most raters do not share their raw data, making it impossible to match indicators with the same units. However, restricting the analysis to perfectly identical indicators would yield that the divergence is entirely due to scope—that is, that there is zero common ground between ESG raters—which does not reflect the real situation. Thus, we use a taxonomy that matches indicators by attribute. To rule out that our subjective judgment drives the results, we sort the indicators according to an alternative taxonomy provided by the Sustainability Accounting Standards Board (SASB).3 The results based on this alternative taxonomy are consistent with those based on our own taxonomy. Second, our linear aggregation rule is not industry-specific, while most ESG rating agencies use industry-specific aggregation rules. However, this approximation seems to be relatively innocuous because a simple linear rule achieves a very high quality of fit. More sophisticated non-linear estimators, such as neural networks, do not yield better results. Third, our main analysis is based on the year 2014, which maximizes our sample size and includes KLD as one of the ratings that has been used in academia most frequently so far. However, replicating the analysis for the year 2017 without KLD yields very similar results. Fourth, we present a regression-based decomposition method as an alternative methodology, which also supports our results.

We extend existing research that has investigated the divergence of ESG ratings (Chatterji et al., 2016; Gibson Brandon, Krueger, and Schmidt, 2021; Christensen, Serafeim, and Sikochi, 2022). Our first contribution to this literature is to quantify the drivers of divergence. Our results show that ESG rating divergence is primarily driven by measurement divergence and it is, for that reason, difficult to resolve. The easiest issue to address is weight divergence. Two ratings could be made consistent by aligning their weighting schemes. However, because weight divergence contributes only 6% to the total divergence, adjusting weights will achieve little. Scope divergence is much more important but harder to address. Scope divergence implies that categories are measured exclusively by one rater. Thus, one can only achieve more agreement by concentrating the ESG assessment on a reduced set of common categories. Nevertheless, measurement divergence remains the most relevant driver of divergence even within this smaller set. Thus, addressing ESG rating divergence requires one to understand how the data that underpin ESG ratings are generated. Our second contribution is a methodology that facilitates dealing with ESG rating divergence. At the firm level, it allows tracing divergence to individual categories. At the aggregate level, it allows identifying the categories in which measurement divergence is most consequential, providing priority areas for future research. Our third contribution is the discovery of a rater effect. This suggests that measurement divergence is not randomly distributed noise but follows rater- and firm-specific patterns. These patterns suggest structural reasons for measurement divergence, such as how rating agencies organize their work.

These results have important implications for future research in sustainable finance. ESG ratings and metrics are an important foundation for the field of sustainable finance. Theory predicts that investor preferences for ESG affect asset prices. In practice, however, investment choices are guided by ESG ratings, making the construction of and disagreement among ESG ratings a central concern. Therefore, future research should attempt to improve the empirical basis of sustainable finance. Researchers should vet data providers carefully and avoid relying too much on one single rater as a community. However, it is not sufficient to consider multiple ratings. The disagreement extends to specific environment, social, and governance categories, meaning that noisy measurement also poses a challenge for research on ESG metrics such as carbon emissions or gender equality. To address this, researchers should invest in developing their own category-specific metrics and ideally make them available to others. In addition, the rater effect raises questions about the economics of the ESG ratings market. Structural reasons or incentives that need to be better understood may influence how certain companies or categories are rated. Beyond improving measurement, the divergence itself begs the question of how uncertainty in ESG ratings affects asset prices, a topic that is gaining attention in the literature (Avramov et al., 2021; Gibson Brandon, Krueger, and Schmidt, 2021). Finally, our results raise the question of how companies respond to being scored differently by different raters (see also Chatterji, Levine, and Toffel, 2009), which will inform the effects of sustainable finance on the real economy.

ESG rating divergence does not imply that measuring ESG performance is a futile exercise. However, it highlights that measuring ESG performance is challenging, that attention to the underlying data are essential, and that the use of ESG ratings and metrics must be carefully considered for each application. Investors can use our methodology to reconcile divergent ratings and focus their research on those categories where ratings disagree. For regulators, our study points to the potential benefits of harmonizing ESG disclosure and establishing a taxonomy of ESG categories. Harmonizing ESG disclosure would help provide a foundation of reliable data. A taxonomy of ESG categories would make it easier to contrast and compare ratings.

The paper is organized as follows: Section 2 describes the data; Section 3 documents the ESG rating divergence; and Section 4 explains the taxonomy and how we estimate the aggregation procedures. In Section 5, we decompose the overall divergence into the contributions of “scope,” “measurement,” and “weight” and Section 6 explores the rater effect. We conclude in Section 7 and highlight the implications of our findings.

2. Data

ESG ratings first emerged in the 1980s as a way for investors to screen companies on environmental, social, and corporate governance performance. The earliest ESG rating agency, Eiris (merged with Vigeo in 2015), was established in 1983 in France, and 7 years later, Kinder, Lydenberg, and Domini (KLD) was established in the USA. While initially catering to a highly specialized investor clientele, including faith-based organizations, the market for ESG ratings has widened dramatically, especially in the past decade. Because ESG ratings are an essential basis for most kinds of sustainable investing, the market for ESG ratings grew in parallel to sustainable investing. As sustainable investing transitioned from niche to mainstream, many early ESG rating providers were acquired by established financial data providers. For example, MSCI bought KLD in 2010, Morningstar acquired 40% of Sustainalytics in 2017, Moody’s bought Vigeo-Eiris in 2019, and S&P Global bought RobecoSAM in 2019.

ESG rating agencies allow investors to screen companies for ESG performance, like credit ratings allow investors to screen companies for creditworthiness. However, at least three important differences between ESG ratings and credit ratings exist. First, while creditworthiness is relatively clearly defined as the probability of default, the definition of ESG performance is less clear. It is a concept based on values that are diverse and evolving. Thus, an important part of the service that ESG rating agencies offer is an interpretation of what ESG performance means. Second, while financial reporting standards have matured and converged over the past century, ESG reporting is in its infancy. There are competing reporting standards for ESG disclosure, many of which are voluntary or limited to single jurisdictions, giving corporations broad discretion regarding whether and what to report. Thus, ESG ratings provide a service to investors by collecting and aggregating information from across a spectrum of sources and reporting standards. These two differences explain why the divergence between ESG ratings is so much more pronounced than the divergence between credit ratings, the latter being correlated at 99%.4 Third, ESG raters are paid by the investors who use the ratings, not by the companies that are rated, as is the case with credit raters. As a result, the problem of ratings shopping, which has been discussed as a potential reason for credit ratings diverging (see, e.g., Bongaerts, Cremers, and Goetzmann, 2012), does not apply to ESG rating providers.

We use data from six different ESG rating providers: KLD5, Sustainalytics, Moody’s ESG, Refinitiv, MSCI, and S&P Global. We include KLD because it is the data set that has been used most frequently in academic studies. Our selection of the other raters was guided by market relevance. All the providers in our sample are widely recognized and used by sustainable finance professionals.6 We approached each provider and requested access to the ratings, the underlying indicators, and documentation about the aggregation rules and measurement protocols of the indicators.

Table I provides descriptive statistics of the aggregate ratings7 and their sample characteristics. The baseline year for our analysis is 2014, which is the year with the largest common sample when KLD is also included. Because most of the academic literature to date relies on KLD data, it is important to include it in our study. We also confirm our results for the year 2017 without KLD. Panel A shows the full sample, where the number of firms ranges from 1,665 to 9,662. Panel B shows the common sample of 924 firms. The mean and median ESG ratings are higher in the common sample for all providers, indicating that the balanced sample tends to drop lower-performing companies. For our further analysis, we normalize the common sample to have zero mean and unit variance in the cross-section.

Descriptive statistics

Descriptive statistics of the aggregate ratings (ESG level) in 2014 for the six rating agencies. Panel A shows the data for the full sample and Panel B for the common sample.

| Panel A: Full sample . | ||||||

|---|---|---|---|---|---|---|

| . | Sustainalytics . | S&P Global . | Moody’s ESG . | KLD . | Refinitiv . | MSCI . |

| Firms | 4,531 | 1,665 | 2,304 | 5,053 | 4,013 | 9,662 |

| Mean | 56.4 | 47.19 | 32.23 | 1.16 | 50.9 | 4.7 |

| Standard Dev. | 9.46 | 21.06 | 11.78 | 1.76 | 30.94 | 1.19 |

| Minimum | 29 | 13 | 5 | 6 | 2.78 | 0 |

| Median | 55 | 40 | 31 | 1 | 53.15 | 4.7 |

| Maximum | 89 | 94 | 67 | 12 | 97.11 | 9.8 |

Panel B: Common sample | ||||||

| Firms | 924 | 924 | 924 | 924 | 924 | 924 |

| Mean | 61.86 | 50.49 | 34.73 | 2.56 | 73.47 | 5.18 |

| Standard Dev. | 9.41 | 20.78 | 11.31 | 2.33 | 23.09 | 1.22 |

| Minimum | 37 | 14 | 6 | 4 | 3.46 | 0.6 |

| Median | 62 | 47 | 33 | 2 | 81.48 | 5.1 |

| Maximum | 89 | 94 | 66 | 12 | 97.11 | 9.8 |

| Panel A: Full sample . | ||||||

|---|---|---|---|---|---|---|

| . | Sustainalytics . | S&P Global . | Moody’s ESG . | KLD . | Refinitiv . | MSCI . |

| Firms | 4,531 | 1,665 | 2,304 | 5,053 | 4,013 | 9,662 |

| Mean | 56.4 | 47.19 | 32.23 | 1.16 | 50.9 | 4.7 |

| Standard Dev. | 9.46 | 21.06 | 11.78 | 1.76 | 30.94 | 1.19 |

| Minimum | 29 | 13 | 5 | 6 | 2.78 | 0 |

| Median | 55 | 40 | 31 | 1 | 53.15 | 4.7 |

| Maximum | 89 | 94 | 67 | 12 | 97.11 | 9.8 |

Panel B: Common sample | ||||||

| Firms | 924 | 924 | 924 | 924 | 924 | 924 |

| Mean | 61.86 | 50.49 | 34.73 | 2.56 | 73.47 | 5.18 |

| Standard Dev. | 9.41 | 20.78 | 11.31 | 2.33 | 23.09 | 1.22 |

| Minimum | 37 | 14 | 6 | 4 | 3.46 | 0.6 |

| Median | 62 | 47 | 33 | 2 | 81.48 | 5.1 |

| Maximum | 89 | 94 | 66 | 12 | 97.11 | 9.8 |

Descriptive statistics

Descriptive statistics of the aggregate ratings (ESG level) in 2014 for the six rating agencies. Panel A shows the data for the full sample and Panel B for the common sample.

| Panel A: Full sample . | ||||||

|---|---|---|---|---|---|---|

| . | Sustainalytics . | S&P Global . | Moody’s ESG . | KLD . | Refinitiv . | MSCI . |

| Firms | 4,531 | 1,665 | 2,304 | 5,053 | 4,013 | 9,662 |

| Mean | 56.4 | 47.19 | 32.23 | 1.16 | 50.9 | 4.7 |

| Standard Dev. | 9.46 | 21.06 | 11.78 | 1.76 | 30.94 | 1.19 |

| Minimum | 29 | 13 | 5 | 6 | 2.78 | 0 |

| Median | 55 | 40 | 31 | 1 | 53.15 | 4.7 |

| Maximum | 89 | 94 | 67 | 12 | 97.11 | 9.8 |

Panel B: Common sample | ||||||

| Firms | 924 | 924 | 924 | 924 | 924 | 924 |

| Mean | 61.86 | 50.49 | 34.73 | 2.56 | 73.47 | 5.18 |

| Standard Dev. | 9.41 | 20.78 | 11.31 | 2.33 | 23.09 | 1.22 |

| Minimum | 37 | 14 | 6 | 4 | 3.46 | 0.6 |

| Median | 62 | 47 | 33 | 2 | 81.48 | 5.1 |

| Maximum | 89 | 94 | 66 | 12 | 97.11 | 9.8 |

| Panel A: Full sample . | ||||||

|---|---|---|---|---|---|---|

| . | Sustainalytics . | S&P Global . | Moody’s ESG . | KLD . | Refinitiv . | MSCI . |

| Firms | 4,531 | 1,665 | 2,304 | 5,053 | 4,013 | 9,662 |

| Mean | 56.4 | 47.19 | 32.23 | 1.16 | 50.9 | 4.7 |

| Standard Dev. | 9.46 | 21.06 | 11.78 | 1.76 | 30.94 | 1.19 |

| Minimum | 29 | 13 | 5 | 6 | 2.78 | 0 |

| Median | 55 | 40 | 31 | 1 | 53.15 | 4.7 |

| Maximum | 89 | 94 | 67 | 12 | 97.11 | 9.8 |

Panel B: Common sample | ||||||

| Firms | 924 | 924 | 924 | 924 | 924 | 924 |

| Mean | 61.86 | 50.49 | 34.73 | 2.56 | 73.47 | 5.18 |

| Standard Dev. | 9.41 | 20.78 | 11.31 | 2.33 | 23.09 | 1.22 |

| Minimum | 37 | 14 | 6 | 4 | 3.46 | 0.6 |

| Median | 62 | 47 | 33 | 2 | 81.48 | 5.1 |

| Maximum | 89 | 94 | 66 | 12 | 97.11 | 9.8 |

3. Divergence

Our point of departure is that ESG ratings diverge. In this section, we establish that the divergence in our sample is substantial and consistent with prior studies. First, we compute Krippendorff’s alpha (Krippendorff, 2004). The advantage of this measure is that it expresses the overall reliability of assessment for any number of raters in one statistic. For the 6 raters and 924 firms in our sample, we obtain a value of 0.55. In general, values above 0.8 are preferred and values above 0.667 are considered a minimum to arrive at tentative conclusions about the true value based on raters’ assessments (Krippendorff, 2004, p. 204). In other words, the disagreement is substantial.

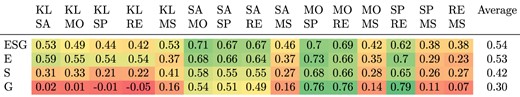

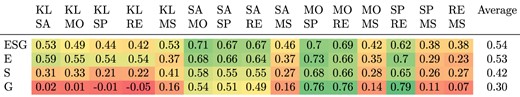

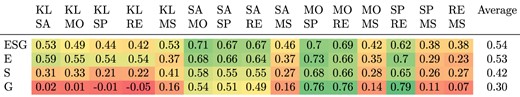

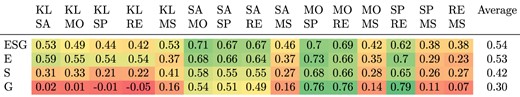

Table II shows pairwise Pearson correlations between the aggregate ESG ratings and between their environmental (E), social (S), and governance (G) dimensions. Correlations at the ESG level are on average 0.54 and range from 0.38 to 0.71. Sustainalytics and Moody’s ESG have the highest level of agreement with each other, with a correlation of 0.71. The environmental dimension has the highest correlation of the three dimensions, with an average of 0.53. The social dimension has an average correlation of 0.42, and the governance dimension has the lowest correlation, with an average of 0.30. KLD and MSCI exhibit the lowest correlations with other raters, both for the aggregate ESG rating and individual dimensions. Considering sampling differences, these results are consistent with ESG rating correlations reported by Chatterji et al. (2016) and Gibson Brandon, Krueger, and Schmidt (2021).

Correlations between ESG ratings

Correlations between ESG ratings at the aggregate rating level (ESG) and at the level of the environmental dimension (E), the social dimension (S), and the governance dimension (G) using the common sample. The results are similar using pairwise common samples based on the full sample. SA, SP, MO, RE, KL, and MS are short for Sustainalytics, S&P Global, Moody’s ESG, Refinitiv, KLD, and MSCI, respectively.

|

|

Correlations between ESG ratings

Correlations between ESG ratings at the aggregate rating level (ESG) and at the level of the environmental dimension (E), the social dimension (S), and the governance dimension (G) using the common sample. The results are similar using pairwise common samples based on the full sample. SA, SP, MO, RE, KL, and MS are short for Sustainalytics, S&P Global, Moody’s ESG, Refinitiv, KLD, and MSCI, respectively.

|

|

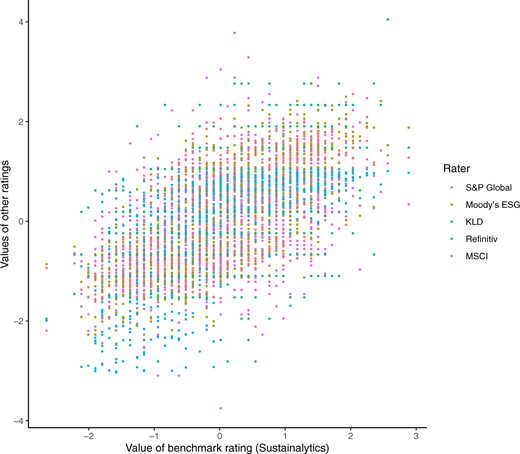

We illustrate the rating divergence in Figure 1. Not to overstate the problem, we use the Sustainalytics rating, which has the highest correlations with the five other ratings, as a benchmark. We plot the values from the other raters against this benchmark rating. Figure 1 illustrates that ESG ratings are positively correlated. As the benchmark rating increases, the other ratings also tend to increase. Nevertheless, the figure also shows substantial divergence. For any level of the benchmark rating, there is a wide range of values given by the other ratings. Suppose a company receives a Sustainalytics rating score of +1.5, which places it among the top 10% of companies rated by Sustainalytics. Yet, other ratings at x = 1.5 score the company below zero, placing the company below the sample average. In other words, the extent of the divergence is such that it is difficult to tell a leader from an average performer. This issue becomes even more pronounced when using other ratings as a benchmark or when looking at rankings. For an illustration, refer to Online Appendix Figure A.1.

ESG rating disagreement.

Notes: This graph illustrates the ESG rating divergence. The horizontal axis indicates the value of the Sustainalytics rating as a benchmark for each firm (n = 924). Rating values by the other five raters are plotted on the vertical axis in different colors. For each rater, the distribution of values has been normalized to zero mean and unit variance. The Sustainalytics rating has discrete values that show up visually as vertical lines where several companies have the same rating value.

The purpose of ESG ratings is to assess a company’s ESG performance. Yet, ESG ratings disagree to an extent that leaves observers with considerable uncertainty as to how good the company’s ESG performance is. It is natural for those interested in ESG performance to wonder what causes ESG ratings to disagree so widely. This is what we investigate next.

4. Scope, Measurement, and Weights

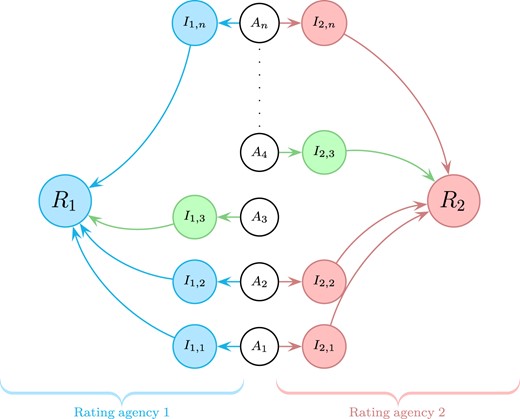

In this section, we explain how we specify ESG ratings in terms of scope, measurement, and weight based on a common taxonomy. This framework, illustrated in Figure 2, allows us to explain why ratings diverge.

The sources of divergence.

Notes: Our schematic representation of an ESG rating consists of the elements scope, measurement, and weight. Scope is the set of attributes An that describe a company’s ESG performance. Measurement determines the indicators … , which produce numerical values for each attribute and are specific to rating agency k. Weights determine how indicators are aggregated into a single ESG rating Rk. Scope divergence results from two raters considering a different set of attributes. Measurement divergence results from two raters using different indicators to measure the same attribute. Weight divergence results from two raters aggregating the same indicators using different weights.

4.1 Scope

The decomposition of ESG rating divergence is not trivial because at the granular level, the structures of different ESG ratings are incompatible. Each rater chooses to break down the concept of ESG performance into different indicators and organizes them in different hierarchies. For example, at the first level of disaggregation, Moody’s ESG, S&P Global, MSCI, and Sustainalytics have three dimensions (E, S, and G), Refinitiv has four, and KLD has seven. Below these first-level dimensions, there are between one and three levels of more granular sub-categories, depending on the rater. At the lowest level, our data set contains between 38 and 282 indicators per rater, which often, but not always, relate to similar underlying attributes. These incompatible structures make it difficult to understand how and why different raters assess the same company in different ways.

We impose our own taxonomy on the data to perform a meaningful comparison of these different rating systems. We develop this taxonomy using a bottom-up approach. First, we create a long list of all available indicators and their detailed descriptions. In cases where the descriptions were not available (or were insufficient), we interviewed the data providers for clarification. In total, the list contains 709 indicators. Second, we group indicators that describe the same attribute in the same “category.” For example, we group all indicators related to the category Water, as shown in Table III. Third, we iteratively refine the taxonomy, following two rules: (a) each indicator is assigned to only one category and (b) a new category is established when at least two indicators from different raters both describe an attribute that is not yet covered by existing categories. For example, indicators related to Forests were taken out of the larger category of Biodiversity to form their own category. The classification is purely based on the attribute that indicators intend to measure, regardless of the method or data source used. Indicators that are unique to one rater and could not be grouped with indicators from other raters were labeled “unclassified” and each given their own rater-specific category.

Example of indicator assignment

This table shows the indicators from different rating agencies assigned to the category Water.

| Rater . | Indicator name . | Category . |

|---|---|---|

| Refinitiv | Emission Reduction/Discharge into Water System | Water |

| Refinitiv | Resource Reduction/Water Recycling | Water |

| Refinitiv | Resource Reduction/Water Use | Water |

| KLD | ENV.CON.Water Management | Water |

| KLD | ENV.STR.Water Stress | Water |

| MSCI | Water Stress Mgmt | Water |

| S&P Global | Water Operations | Water |

| S&P Global | Water Related Risks | Water |

| Sustainalytics | Water Intensity-Raw Score | Water |

| Sustainalytics | Water Management Programmes-Raw Score | Water |

| Moody’s ESG | Water | Water |

| Rater . | Indicator name . | Category . |

|---|---|---|

| Refinitiv | Emission Reduction/Discharge into Water System | Water |

| Refinitiv | Resource Reduction/Water Recycling | Water |

| Refinitiv | Resource Reduction/Water Use | Water |

| KLD | ENV.CON.Water Management | Water |

| KLD | ENV.STR.Water Stress | Water |

| MSCI | Water Stress Mgmt | Water |

| S&P Global | Water Operations | Water |

| S&P Global | Water Related Risks | Water |

| Sustainalytics | Water Intensity-Raw Score | Water |

| Sustainalytics | Water Management Programmes-Raw Score | Water |

| Moody’s ESG | Water | Water |

Example of indicator assignment

This table shows the indicators from different rating agencies assigned to the category Water.

| Rater . | Indicator name . | Category . |

|---|---|---|

| Refinitiv | Emission Reduction/Discharge into Water System | Water |

| Refinitiv | Resource Reduction/Water Recycling | Water |

| Refinitiv | Resource Reduction/Water Use | Water |

| KLD | ENV.CON.Water Management | Water |

| KLD | ENV.STR.Water Stress | Water |

| MSCI | Water Stress Mgmt | Water |

| S&P Global | Water Operations | Water |

| S&P Global | Water Related Risks | Water |

| Sustainalytics | Water Intensity-Raw Score | Water |

| Sustainalytics | Water Management Programmes-Raw Score | Water |

| Moody’s ESG | Water | Water |

| Rater . | Indicator name . | Category . |

|---|---|---|

| Refinitiv | Emission Reduction/Discharge into Water System | Water |

| Refinitiv | Resource Reduction/Water Recycling | Water |

| Refinitiv | Resource Reduction/Water Use | Water |

| KLD | ENV.CON.Water Management | Water |

| KLD | ENV.STR.Water Stress | Water |

| MSCI | Water Stress Mgmt | Water |

| S&P Global | Water Operations | Water |

| S&P Global | Water Related Risks | Water |

| Sustainalytics | Water Intensity-Raw Score | Water |

| Sustainalytics | Water Management Programmes-Raw Score | Water |

| Moody’s ESG | Water | Water |

The resulting taxonomy, shown in Table IV, assigns the 709 indicators to a total of sixty-four distinct categories. Refinitiv has the most individual indicators with 282, followed by Sustainalytics with 163. KLD, S&P Global, and MSCI have seventy-eight, eighty, and sixty-eight, respectively, and Moody’s ESG has thirty-eight. Some categories—Forests, for example—contain only one indicator from two raters. Others, such as Supply Chain, contain several indicators from all raters. Arguably, Forests is a much narrower category than Supply Chain. The reason for this difference in broadness is that there were no indicators in Supply Chain that together represented a more narrow common category. Therefore, the Supply Chain comparison is at a more general level and it may seem obvious that different raters take a different view of this category. Nevertheless, this broad comparison represents the most specific level possible given the data.

Number of indicators per rater and category

This table shows how many indicators are provided by the different rating agencies per category. Categories that are covered by all raters are printed in bold.

| Category . | Sustainalytics . | S&P Global . | Refinitiv . | Moody’s ESG . | MSCI . | KLD . |

|---|---|---|---|---|---|---|

| Access to Basic Services | 2 | 1 | 1 | 1 | ||

| Access to Healthcare | 6 | 3 | 1 | 1 | 1 | |

| Animal Welfare | 2 | 1 | ||||

| Anti-competitive Practices | 2 | 1 | 1 | 1 | ||

| Audit | 4 | 5 | 1 | |||

| Biodiversity | 1 | 1 | 3 | 1 | 1 | 2 |

| Board | 6 | 25 | 1 | 1 | ||

| Board Diversity | 2 | 1 | 3 | |||

| Business Ethics | 4 | 2 | 1 | 1 | 1 | |

| Chairperson-CEO Separation | 1 | 1 | ||||

| Child Labor | 1 | 1 | 1 | |||

| Climate Risk Mgmt. | 2 | 1 | 1 | 2 | ||

| Clinical Trials | 1 | 1 | ||||

| Collective Bargaining | 2 | 1 | 1 | |||

| Community and Society | 3 | 6 | 10 | 1 | 1 | |

| Corporate Governance | 1 | 1 | ||||

| Corruption | 2 | 1 | 1 | 1 | 1 | |

| Customer Relationship | 1 | 1 | 7 | 1 | 2 | |

| Diversity | 2 | 9 | 1 | 3 | ||

| ESG Incentives | 1 | 1 | ||||

| Electromagnetic Fields | 1 | 1 | ||||

| Employee Development | 1 | 2 | 13 | 1 | 1 | 3 |

| Employee Turnover | 1 | 1 | ||||

| Energy | 3 | 6 | 5 | 1 | 2 | 1 |

| Environmental Fines | 1 | 1 | 1 | |||

| Environmental Mgmt. System | 2 | 1 | 1 | |||

| Environmental Policy | 4 | 2 | 4 | 2 | ||

| Environmental Reporting | 2 | 1 | 1 | |||

| Financial Inclusion | 1 | 1 | 1 | |||

| Forests | 1 | 1 | ||||

| GHG Emissions | 5 | 5 | 1 | 1 | ||

| GHG Policies | 3 | 2 | 4 | |||

| GMOs | 1 | 1 | 1 | |||

| Global Compact Membership | 1 | 1 | ||||

| Green Buildings | 5 | 2 | 1 | 1 | 1 | |

| Green Products | 7 | 1 | 20 | 1 | 2 | 1 |

| HIV Programs | 1 | 1 | ||||

| Hazardous Waste | 1 | 1 | 1 | 1 | ||

| Health and Safety | 7 | 1 | 7 | 1 | 1 | 2 |

| Human Rights | 2 | 1 | 5 | 1 | 5 | |

| Indigenous Rights | 1 | 1 | 1 | |||

| Labor Practices | 3 | 1 | 16 | 4 | 1 | 3 |

| Lobbying | 3 | 1 | 1 | |||

| Non-GHG Air Emissions | 1 | 2 | ||||

| Ozone-Depleting Gases | 1 | 1 | ||||

| Packaging | 1 | 1 | 1 | |||

| Philanthropy | 3 | 1 | 2 | 1 | 1 | |

| Privacy and IT | 1 | 3 | 1 | 2 | ||

| Product Safety | 2 | 2 | 13 | 3 | 2 | 6 |

| Public Health | 1 | 3 | 1 | 2 | ||

| Remuneration | 4 | 1 | 15 | 2 | 1 | 4 |

| Reporting Quality | 3 | 5 | 1 | |||

| Resource Efficiency | 1 | 3 | 6 | |||

| Responsible Marketing | 3 | 3 | 1 | 1 | 1 | |

| Shareholders | 16 | 1 | ||||

| Site Closure | 1 | 1 | ||||

| Supply Chain | 21 | 3 | 4 | 4 | 3 | 6 |

| Sustainable Finance | 9 | 5 | 3 | 3 | 4 | |

| Systemic Risk | 1 | 1 | 1 | |||

| Taxes | 2 | 1 | 1 | |||

| Toxic Spills | 1 | 2 | 1 | |||

| Unions | 1 | 1 | ||||

| Waste | 3 | 2 | 4 | 1 | 3 | |

| Water | 2 | 2 | 3 | 1 | 1 | 2 |

| Unclassified | 7 | 7 | 42 | 1 | 35 | 2 |

| Sum | 163 | 80 | 282 | 38 | 68 | 78 |

| Category . | Sustainalytics . | S&P Global . | Refinitiv . | Moody’s ESG . | MSCI . | KLD . |

|---|---|---|---|---|---|---|

| Access to Basic Services | 2 | 1 | 1 | 1 | ||

| Access to Healthcare | 6 | 3 | 1 | 1 | 1 | |

| Animal Welfare | 2 | 1 | ||||

| Anti-competitive Practices | 2 | 1 | 1 | 1 | ||

| Audit | 4 | 5 | 1 | |||

| Biodiversity | 1 | 1 | 3 | 1 | 1 | 2 |

| Board | 6 | 25 | 1 | 1 | ||

| Board Diversity | 2 | 1 | 3 | |||

| Business Ethics | 4 | 2 | 1 | 1 | 1 | |

| Chairperson-CEO Separation | 1 | 1 | ||||

| Child Labor | 1 | 1 | 1 | |||

| Climate Risk Mgmt. | 2 | 1 | 1 | 2 | ||

| Clinical Trials | 1 | 1 | ||||

| Collective Bargaining | 2 | 1 | 1 | |||

| Community and Society | 3 | 6 | 10 | 1 | 1 | |

| Corporate Governance | 1 | 1 | ||||

| Corruption | 2 | 1 | 1 | 1 | 1 | |

| Customer Relationship | 1 | 1 | 7 | 1 | 2 | |

| Diversity | 2 | 9 | 1 | 3 | ||

| ESG Incentives | 1 | 1 | ||||

| Electromagnetic Fields | 1 | 1 | ||||

| Employee Development | 1 | 2 | 13 | 1 | 1 | 3 |

| Employee Turnover | 1 | 1 | ||||

| Energy | 3 | 6 | 5 | 1 | 2 | 1 |

| Environmental Fines | 1 | 1 | 1 | |||

| Environmental Mgmt. System | 2 | 1 | 1 | |||

| Environmental Policy | 4 | 2 | 4 | 2 | ||

| Environmental Reporting | 2 | 1 | 1 | |||

| Financial Inclusion | 1 | 1 | 1 | |||

| Forests | 1 | 1 | ||||

| GHG Emissions | 5 | 5 | 1 | 1 | ||

| GHG Policies | 3 | 2 | 4 | |||

| GMOs | 1 | 1 | 1 | |||

| Global Compact Membership | 1 | 1 | ||||

| Green Buildings | 5 | 2 | 1 | 1 | 1 | |

| Green Products | 7 | 1 | 20 | 1 | 2 | 1 |

| HIV Programs | 1 | 1 | ||||

| Hazardous Waste | 1 | 1 | 1 | 1 | ||

| Health and Safety | 7 | 1 | 7 | 1 | 1 | 2 |

| Human Rights | 2 | 1 | 5 | 1 | 5 | |

| Indigenous Rights | 1 | 1 | 1 | |||

| Labor Practices | 3 | 1 | 16 | 4 | 1 | 3 |

| Lobbying | 3 | 1 | 1 | |||

| Non-GHG Air Emissions | 1 | 2 | ||||

| Ozone-Depleting Gases | 1 | 1 | ||||

| Packaging | 1 | 1 | 1 | |||

| Philanthropy | 3 | 1 | 2 | 1 | 1 | |

| Privacy and IT | 1 | 3 | 1 | 2 | ||

| Product Safety | 2 | 2 | 13 | 3 | 2 | 6 |

| Public Health | 1 | 3 | 1 | 2 | ||

| Remuneration | 4 | 1 | 15 | 2 | 1 | 4 |

| Reporting Quality | 3 | 5 | 1 | |||

| Resource Efficiency | 1 | 3 | 6 | |||

| Responsible Marketing | 3 | 3 | 1 | 1 | 1 | |

| Shareholders | 16 | 1 | ||||

| Site Closure | 1 | 1 | ||||

| Supply Chain | 21 | 3 | 4 | 4 | 3 | 6 |

| Sustainable Finance | 9 | 5 | 3 | 3 | 4 | |

| Systemic Risk | 1 | 1 | 1 | |||

| Taxes | 2 | 1 | 1 | |||

| Toxic Spills | 1 | 2 | 1 | |||

| Unions | 1 | 1 | ||||

| Waste | 3 | 2 | 4 | 1 | 3 | |

| Water | 2 | 2 | 3 | 1 | 1 | 2 |

| Unclassified | 7 | 7 | 42 | 1 | 35 | 2 |

| Sum | 163 | 80 | 282 | 38 | 68 | 78 |

Number of indicators per rater and category

This table shows how many indicators are provided by the different rating agencies per category. Categories that are covered by all raters are printed in bold.

| Category . | Sustainalytics . | S&P Global . | Refinitiv . | Moody’s ESG . | MSCI . | KLD . |

|---|---|---|---|---|---|---|

| Access to Basic Services | 2 | 1 | 1 | 1 | ||

| Access to Healthcare | 6 | 3 | 1 | 1 | 1 | |

| Animal Welfare | 2 | 1 | ||||

| Anti-competitive Practices | 2 | 1 | 1 | 1 | ||

| Audit | 4 | 5 | 1 | |||

| Biodiversity | 1 | 1 | 3 | 1 | 1 | 2 |

| Board | 6 | 25 | 1 | 1 | ||

| Board Diversity | 2 | 1 | 3 | |||

| Business Ethics | 4 | 2 | 1 | 1 | 1 | |

| Chairperson-CEO Separation | 1 | 1 | ||||

| Child Labor | 1 | 1 | 1 | |||

| Climate Risk Mgmt. | 2 | 1 | 1 | 2 | ||

| Clinical Trials | 1 | 1 | ||||

| Collective Bargaining | 2 | 1 | 1 | |||

| Community and Society | 3 | 6 | 10 | 1 | 1 | |

| Corporate Governance | 1 | 1 | ||||

| Corruption | 2 | 1 | 1 | 1 | 1 | |

| Customer Relationship | 1 | 1 | 7 | 1 | 2 | |

| Diversity | 2 | 9 | 1 | 3 | ||

| ESG Incentives | 1 | 1 | ||||

| Electromagnetic Fields | 1 | 1 | ||||

| Employee Development | 1 | 2 | 13 | 1 | 1 | 3 |

| Employee Turnover | 1 | 1 | ||||

| Energy | 3 | 6 | 5 | 1 | 2 | 1 |

| Environmental Fines | 1 | 1 | 1 | |||

| Environmental Mgmt. System | 2 | 1 | 1 | |||

| Environmental Policy | 4 | 2 | 4 | 2 | ||

| Environmental Reporting | 2 | 1 | 1 | |||

| Financial Inclusion | 1 | 1 | 1 | |||

| Forests | 1 | 1 | ||||

| GHG Emissions | 5 | 5 | 1 | 1 | ||

| GHG Policies | 3 | 2 | 4 | |||

| GMOs | 1 | 1 | 1 | |||

| Global Compact Membership | 1 | 1 | ||||

| Green Buildings | 5 | 2 | 1 | 1 | 1 | |

| Green Products | 7 | 1 | 20 | 1 | 2 | 1 |

| HIV Programs | 1 | 1 | ||||

| Hazardous Waste | 1 | 1 | 1 | 1 | ||

| Health and Safety | 7 | 1 | 7 | 1 | 1 | 2 |

| Human Rights | 2 | 1 | 5 | 1 | 5 | |

| Indigenous Rights | 1 | 1 | 1 | |||

| Labor Practices | 3 | 1 | 16 | 4 | 1 | 3 |

| Lobbying | 3 | 1 | 1 | |||

| Non-GHG Air Emissions | 1 | 2 | ||||

| Ozone-Depleting Gases | 1 | 1 | ||||

| Packaging | 1 | 1 | 1 | |||

| Philanthropy | 3 | 1 | 2 | 1 | 1 | |

| Privacy and IT | 1 | 3 | 1 | 2 | ||

| Product Safety | 2 | 2 | 13 | 3 | 2 | 6 |

| Public Health | 1 | 3 | 1 | 2 | ||

| Remuneration | 4 | 1 | 15 | 2 | 1 | 4 |

| Reporting Quality | 3 | 5 | 1 | |||

| Resource Efficiency | 1 | 3 | 6 | |||

| Responsible Marketing | 3 | 3 | 1 | 1 | 1 | |

| Shareholders | 16 | 1 | ||||

| Site Closure | 1 | 1 | ||||

| Supply Chain | 21 | 3 | 4 | 4 | 3 | 6 |

| Sustainable Finance | 9 | 5 | 3 | 3 | 4 | |

| Systemic Risk | 1 | 1 | 1 | |||

| Taxes | 2 | 1 | 1 | |||

| Toxic Spills | 1 | 2 | 1 | |||

| Unions | 1 | 1 | ||||

| Waste | 3 | 2 | 4 | 1 | 3 | |

| Water | 2 | 2 | 3 | 1 | 1 | 2 |

| Unclassified | 7 | 7 | 42 | 1 | 35 | 2 |

| Sum | 163 | 80 | 282 | 38 | 68 | 78 |

| Category . | Sustainalytics . | S&P Global . | Refinitiv . | Moody’s ESG . | MSCI . | KLD . |

|---|---|---|---|---|---|---|

| Access to Basic Services | 2 | 1 | 1 | 1 | ||

| Access to Healthcare | 6 | 3 | 1 | 1 | 1 | |

| Animal Welfare | 2 | 1 | ||||

| Anti-competitive Practices | 2 | 1 | 1 | 1 | ||

| Audit | 4 | 5 | 1 | |||

| Biodiversity | 1 | 1 | 3 | 1 | 1 | 2 |

| Board | 6 | 25 | 1 | 1 | ||

| Board Diversity | 2 | 1 | 3 | |||

| Business Ethics | 4 | 2 | 1 | 1 | 1 | |

| Chairperson-CEO Separation | 1 | 1 | ||||

| Child Labor | 1 | 1 | 1 | |||

| Climate Risk Mgmt. | 2 | 1 | 1 | 2 | ||

| Clinical Trials | 1 | 1 | ||||

| Collective Bargaining | 2 | 1 | 1 | |||

| Community and Society | 3 | 6 | 10 | 1 | 1 | |

| Corporate Governance | 1 | 1 | ||||

| Corruption | 2 | 1 | 1 | 1 | 1 | |

| Customer Relationship | 1 | 1 | 7 | 1 | 2 | |

| Diversity | 2 | 9 | 1 | 3 | ||

| ESG Incentives | 1 | 1 | ||||

| Electromagnetic Fields | 1 | 1 | ||||

| Employee Development | 1 | 2 | 13 | 1 | 1 | 3 |

| Employee Turnover | 1 | 1 | ||||

| Energy | 3 | 6 | 5 | 1 | 2 | 1 |

| Environmental Fines | 1 | 1 | 1 | |||

| Environmental Mgmt. System | 2 | 1 | 1 | |||

| Environmental Policy | 4 | 2 | 4 | 2 | ||

| Environmental Reporting | 2 | 1 | 1 | |||

| Financial Inclusion | 1 | 1 | 1 | |||

| Forests | 1 | 1 | ||||

| GHG Emissions | 5 | 5 | 1 | 1 | ||

| GHG Policies | 3 | 2 | 4 | |||

| GMOs | 1 | 1 | 1 | |||

| Global Compact Membership | 1 | 1 | ||||

| Green Buildings | 5 | 2 | 1 | 1 | 1 | |

| Green Products | 7 | 1 | 20 | 1 | 2 | 1 |

| HIV Programs | 1 | 1 | ||||

| Hazardous Waste | 1 | 1 | 1 | 1 | ||

| Health and Safety | 7 | 1 | 7 | 1 | 1 | 2 |

| Human Rights | 2 | 1 | 5 | 1 | 5 | |

| Indigenous Rights | 1 | 1 | 1 | |||

| Labor Practices | 3 | 1 | 16 | 4 | 1 | 3 |

| Lobbying | 3 | 1 | 1 | |||

| Non-GHG Air Emissions | 1 | 2 | ||||

| Ozone-Depleting Gases | 1 | 1 | ||||

| Packaging | 1 | 1 | 1 | |||

| Philanthropy | 3 | 1 | 2 | 1 | 1 | |

| Privacy and IT | 1 | 3 | 1 | 2 | ||

| Product Safety | 2 | 2 | 13 | 3 | 2 | 6 |

| Public Health | 1 | 3 | 1 | 2 | ||

| Remuneration | 4 | 1 | 15 | 2 | 1 | 4 |

| Reporting Quality | 3 | 5 | 1 | |||

| Resource Efficiency | 1 | 3 | 6 | |||

| Responsible Marketing | 3 | 3 | 1 | 1 | 1 | |

| Shareholders | 16 | 1 | ||||

| Site Closure | 1 | 1 | ||||

| Supply Chain | 21 | 3 | 4 | 4 | 3 | 6 |

| Sustainable Finance | 9 | 5 | 3 | 3 | 4 | |

| Systemic Risk | 1 | 1 | 1 | |||

| Taxes | 2 | 1 | 1 | |||

| Toxic Spills | 1 | 2 | 1 | |||

| Unions | 1 | 1 | ||||

| Waste | 3 | 2 | 4 | 1 | 3 | |

| Water | 2 | 2 | 3 | 1 | 1 | 2 |

| Unclassified | 7 | 7 | 42 | 1 | 35 | 2 |

| Sum | 163 | 80 | 282 | 38 | 68 | 78 |

Table IV shows how many indicators each rater provides per category. On the one hand, some categories are considered by all six raters, indicating that these are commonly accepted core ESG issues. These are Biodiversity, Employee Development, Energy, Green Products, Health and Safety, Labor Practices, Product Safety, Remuneration, Supply Chain, and Water. On the other hand, many empty cells show that far from all categories are covered by all ratings. Gaps exist both for categories that could be described as specialized, such as Electromagnetic Fields and also for the Taxes category, which could be viewed as a fundamental concern in the context of ESG issues. Also, the considerable number of unclassified indicators shows that many ESG aspects are only measured by one out of six raters. Refinitiv has, with forty-two, the most unclassified indicators, almost all of which stem from Refinitiv’s economic dimension. This dimension contains indicators, such as net income growth or capital expenditure that other rating agencies do not consider. MSCI has thirty-four unclassified indicators; these are what MSCI terms “exposure scores.” Next to scores that evaluate how well a company manages an issue, MSCI has scores that measure how relevant the issue is for the specific company. None of the other raters have indicators that explicitly measure this.

The taxonomy imposes a structure on the data that allows a systematic comparison. Obviously, results may be sensitive to the particular way we built it. To make sure our results are not driven by a particular classification, we created an alternative taxonomy as a robustness check. Instead of constructing the categories from the bottom up, we produce a top-down taxonomy based on SASB. SASB has identified twenty-six general issue categories based on a comprehensive stakeholder consultation process. As such, these categories represent the consensus of a wide range of investors and regulators on the scope of relevant ESG categories. We map all indicators against these twenty-six general issue categories, again requiring that each indicator can only be assigned to one category. This alternative taxonomy, along with results that are based on it, is provided in the Online Appendix. All our results also hold for this alternative taxonomy.

4.2 Measurement

We can study measurement divergence using our taxonomy by comparing the assessments of different raters at the level of categories. We create category scores (C) for each category, firm, and rater. Category scores are calculated by taking the average of the indicator values assigned to the category. Let us define the notations:

Category scores, variables, and indexes:

The following variables and indexes are used throughout the paper: The category score is computed as

| Notation . | Variable . | Index . | Range . |

|---|---|---|---|

| A | Attributes | i | |

| I | Indicators | i | |

| C | Categories | j | |

| Nfkj | Indicators | i |

| Notation . | Variable . | Index . | Range . |

|---|---|---|---|

| A | Attributes | i | |

| I | Indicators | i | |

| C | Categories | j | |

| Nfkj | Indicators | i |

| Notation . | Variable . | Index . | Range . |

|---|---|---|---|

| A | Attributes | i | |

| I | Indicators | i | |

| C | Categories | j | |

| Nfkj | Indicators | i |

| Notation . | Variable . | Index . | Range . |

|---|---|---|---|

| A | Attributes | i | |

| I | Indicators | i | |

| C | Categories | j | |

| Nfkj | Indicators | i |

for firm , rating agency , and category j.

Category scores represent a rating agency’s assessment of a certain ESG category. They are based on different sets of indicators that each rely on different measurement protocols. It follows that differences between category scores stem from differences in “how” rating agencies choose to measure, rather than what they choose to measure. Thus, differences between the same categories from different raters can be interpreted as measurement divergence. Some rating agencies employ different sets of indicators for different industries. Such industry-specific considerations about measurement are also reflected in the category scores because those scores take the average of all available indicator values.

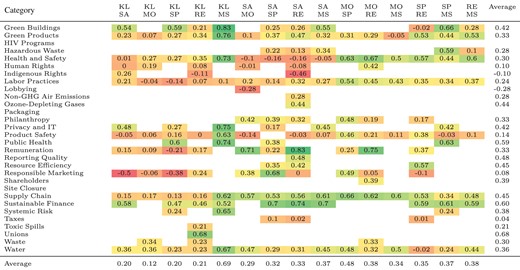

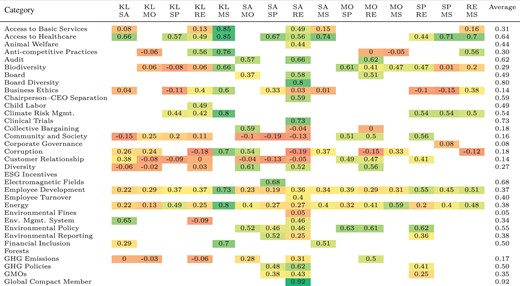

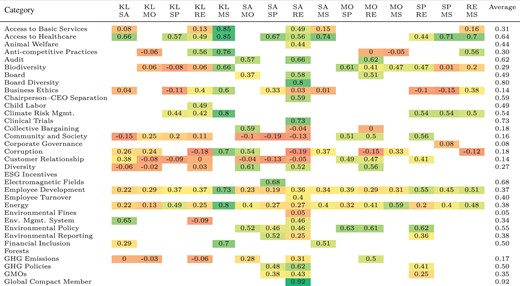

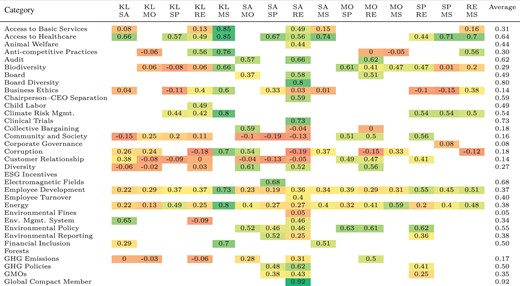

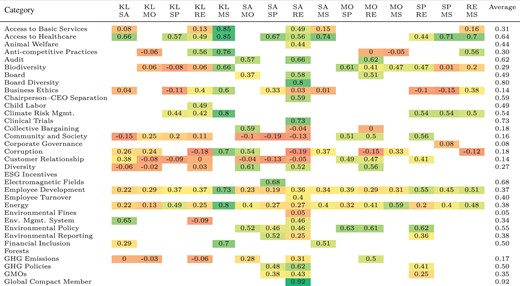

Table V shows the correlations between the categories. The correlations are calculated on the basis of complete pairwise observations per category and rater pair. The table offers two insights. First, correlation levels are heterogeneous. Environmental policy, for instance, has an average correlation level of 0.55. This indicates at least some level of agreement regarding the existence and quality of the firms’ environmental policy. However, even categories that measure straightforward facts that are easily obtained from public records do not all have high levels of correlation. For instance, membership of the UN Global Compact and CEO/Chairperson separation should be unambiguous but show correlations of 0.92 and 0.59, respectively. There are also several negative correlations, such as Lobbying between Sustainalytics and Moody’s ESG or Indigenous Rights between Sustainalytics and Refinitiv. In these cases, the level of disagreement is so severe that rating agencies reach not merely different but opposite conclusions.

Correlation of category scores

Correlations of category scores for pairs of rating agencies. Category scores are an average of the indicators for firm f and rater k assigned to the same category. Empty cells reflect differences in scope or missing data. SA, SP, MO, RE, KL, and MS are short for Sustainalytics, S&P Global, Moody’s ESG, Refinitiv, KLD, and MSCI, respectively.

|

|

Correlation of category scores

Correlations of category scores for pairs of rating agencies. Category scores are an average of the indicators for firm f and rater k assigned to the same category. Empty cells reflect differences in scope or missing data. SA, SP, MO, RE, KL, and MS are short for Sustainalytics, S&P Global, Moody’s ESG, Refinitiv, KLD, and MSCI, respectively.

|

|

The second insight is that correlations tend to increase with granularity. For example, the correlations of the Water and Energy categories are on average 0.36 and 0.38, respectively. This is substantially lower than the correlation of the environmental dimension, with an average of 0.53 reported in Table II. This implies that divergences compensate for each other to some extent during aggregation. Several potential reasons can explain this observation. One reason might be that category scores behave like noisy measures of an underlying latent quality so that the measurement disagreement on individual categories cancels out during aggregation. It may also be the case that rating agencies assess a firm relatively strictly in one category and relatively leniently in another. A concern might be that the low correlations at the category level result from misclassification in our taxonomy, in the sense that highly correlated indicators were sorted into different categories. While we cannot rule this out completely, the alternative taxonomy based on SASB criteria mitigates this concern. It is a much less granular classification, which, therefore, should decrease the influence of any misclassification. However, the average correlation per rater pair changes only a little and not systematically when using this alternative taxonomy. This provides reassurance that the observed correlation levels are not an artifact of misclassification in our taxonomy. The correlations with the taxonomy based on SASB criteria can be seen in Table A.3 of the Online Appendix.

4.3 Weight

We can proceed with an analysis of weight divergence based on the category scores. To do so, we estimate the aggregation rule that transforms the category scores Cfkj into the rating Rfk for each rater k. Category scores, as defined in Section 4.2, serve as independent variables. When no indicator values are available to compute the category score for a given firm, the score is set to zero. This is necessary to run regressions without dropping all categories with missing values, which are numerous. Of course, this entails an assumption that missing data indicate poor performance. Categories for which no values are available for any firm in the common sample are dropped. After this treatment, category scores are normalized to zero mean and unit variance, corresponding to the normalized ratings. Each unclassified indicator is treated as a separate rater-specific category.

Because all the data have been normalized, we exclude the constant term. Due to the non-negativity constraint, we calculate the standard errors by bootstrap. We focus on the R2 as a measure of the quality of fit.

The results are shown in Table VI. MSCI has the lowest R2, with 0.79. Sustainalytics is the second lowest, with 0.90. The regressions for KLD, Moody’s ESG, Refinitiv, and S&P Global have R2 values of 0.99, 0.96, 0.92, and 0.98, respectively. These high R2 values indicate that a linear model based on our taxonomy is able to replicate the original ratings quite accurately.

Non-negative least squares regression

Non-negative linear regressions of the ESG rating on the categories of the same rater. The constant term is excluded because the data have been normalized. The standard errors are bootstrapped. Non-existent categories are denoted by dashes. The three most important categories per rater, indicated by coefficient size, are printed in bold.

| Category . | Sustainalytics . | S&P Global . | Refinitiv . | Moody’s ESG . | MSCI . | KLD . |

|---|---|---|---|---|---|---|

| Access to Basic Services | 0.019 | – | 0 | – | 0.138*** | 0.065*** |

| Access to Healthcare | 0.051*** | 0.004 | 0 | – | 0.079*** | 0.051*** |

| Animal Welfare | 0.05*** | – | 0 | – | – | – |

| Anti-competitive Practices | – | – | 0.05*** | 0.023*** | 0 | 0.131*** |

| Audit | 0 | – | 0.026* | 0.084*** | – | – |

| Biodiversity | 0 | 0 | 0 | 0.028*** | 0.366*** | 0.076*** |

| Board | 0.072*** | – | 0.196*** | 0.113*** | 0 | – |

| Board Diversity | 0.043*** | – | 0 | – | – | 0 |

| Business Ethics | 0.097*** | 0.046*** | 0.008 | – | 0 | 0.148*** |

| Chairperson-CEO Separation | 0.039*** | – | 0.016 | – | – | – |

| Child Labor | – | – | 0.008 | 0 | – | 0.046*** |

| Climate Risk Mgmt. | – | 0.137*** | 0.064*** | – | 0.069** | 0.234*** |

| Clinical Trials | 0 | – | 0 | – | – | – |

| Collective Bargaining | 0.051*** | – | 0.011* | 0.072*** | – | – |

| Community and Society | 0.079*** | 0.086*** | 0.03* | 0.001 | – | 0.14*** |

| Corporate Governance | – | 0.048*** | – | – | 0.198*** | – |

| Corruption | 0.049*** | – | 0.022* | 0.072*** | 0.388*** | 0.124*** |

| Customer Relationship | 0.127*** | 0.097*** | 0.086*** | 0.027*** | – | 0.104*** |

| Diversity | 0.108*** | – | 0.066*** | 0.159*** | – | 0.04*** |

| ESG Incentives | 0.006 | 0 | – | – | – | – |

| Electromagnetic Fields | 0.021** | 0 | – | – | – | – |

| Employee Development | 0.018* | 0.221*** | 0.116*** | 0.067*** | 0.406*** | 0.149*** |

| Employee Turnover | 0.024* | – | 0 | – | – | – |

| Energy | 0.032** | 0.016*** | 0.029** | 0.103*** | 0.194*** | 0.046*** |

| Environmental Fines | 0 | – | 0 | – | – | 0 |

| Environmental Mgmt. System | 0.199*** | – | 0.009 | – | – | 0.205*** |

| Environmental Policy | 0.091*** | 0.098*** | 0.012 | 0.187*** | – | – |

| Environmental Reporting | 0.043** | 0.039*** | 0.007 | – | – | – |

| Financial Inclusion | 0 | – | – | – | 0.089*** | 0.061*** |

| Forests | 0.008 | 0.016* | – | – | – | – |

| GHG Emissions | 0.048*** | – | 0.002 | 0.033*** | – | 0.021** |

| GHG Policies | 0.086*** | 0.008** | 0.047** | – | – | – |

| GMOs | 0 | 0 | 0 | – | – | – |

| Global Compact Membership | 0.029** | – | 0 | – | – | – |

| Green Buildings | 0.072*** | 0.071*** | 0 | – | 0.304*** | 0.072*** |

| Green Products | 0.167*** | 0.037*** | 0.093*** | 0.024** | 0.351*** | 0.129*** |

| HIV Programs | 0 | – | 0.003 | – | – | – |

| Hazardous Waste | 0.021* | 0 | 0 | – | 0.09*** | – |

| Health and Safety | 0.049*** | 0.042*** | 0.049*** | 0.125*** | 0.148*** | 0.174*** |

| Human Rights | 0.072*** | 0 | 0.066*** | 0 | – | 0.14*** |

| Indigenous Rights | 0.033* | – | 0.006 | – | – | 0.087*** |

| Labor Practices | 0.005 | 0.063*** | 0.067*** | 0.153*** | 0.166*** | 0.129*** |

| Lobbying | 0.091*** | 0 | – | 0.013 | – | – |

| Non-GHG Air Emissions | 0.014 | – | 0 | – | – | – |

| Ozone-depleting Gases | 0 | – | 0 | – | – | – |

| Packaging | – | 0 | – | – | 0.128** | 0.033*** |

| Philanthropy | 0.028* | 0.075*** | 0.039*** | 0.073*** | – | 0 |

| Privacy and IT | 0.022* | 0.039*** | – | – | 0.276*** | 0.124*** |

| Product Safety | 0.048*** | 0.002 | 0.059*** | 0.062*** | 0.429*** | 0.216*** |

| Public Health | 0.022** | 0.011* | – | – | 0.029 | 0.074*** |

| Remuneration | 0 | 0.054*** | 0.117*** | 0.113*** | 0 | 0.223*** |

| Reporting Quality | 0.123*** | – | 0.107*** | – | – | 0 |

| Resource Efficiency | 0.014 | 0.114*** | 0.135*** | – | – | – |

| Responsible Marketing | 0 | 0.033*** | 0 | 0.002 | – | 0.081*** |

| Shareholders | – | – | 0.111*** | 0.089*** | – | – |

| Site Closure | 0.008 | 0 | – | – | – | – |

| Supply Chain | 0.253*** | 0.061*** | 0.042** | 0.05*** | 0.188*** | 0.128*** |

| Sustainable Finance | 0.108*** | 0.079*** | 0.063*** | – | 0.275*** | 0.098*** |

| Systemic Risk | – | 0.053*** | – | – | 0.349*** | 0.103*** |

| Taxes | 0.052*** | 0.01 | 0.03** | – | – | – |

| Toxic Spills | 0 | – | 0.001 | – | – | 0.113*** |

| Unions | – | – | 0.013 | – | – | 0.158*** |

| Waste | 0 | 0.005 | 0.035*** | 0.009 | – | 0.186*** |

| Water | 0.03** | 0.016*** | 0.028** | 0 | 0.035 | 0.175*** |

| Unclassified Indicators | Yes | Yes | Yes | Yes | Yes | Yes |

| R2 | 0.90 | 0.98 | 0.92 | 0.96 | 0.79 | 0.99 |

| Firms | 924 | 924 | 924 | 924 | 924 | 924 |

| Category . | Sustainalytics . | S&P Global . | Refinitiv . | Moody’s ESG . | MSCI . | KLD . |

|---|---|---|---|---|---|---|

| Access to Basic Services | 0.019 | – | 0 | – | 0.138*** | 0.065*** |

| Access to Healthcare | 0.051*** | 0.004 | 0 | – | 0.079*** | 0.051*** |

| Animal Welfare | 0.05*** | – | 0 | – | – | – |

| Anti-competitive Practices | – | – | 0.05*** | 0.023*** | 0 | 0.131*** |

| Audit | 0 | – | 0.026* | 0.084*** | – | – |

| Biodiversity | 0 | 0 | 0 | 0.028*** | 0.366*** | 0.076*** |

| Board | 0.072*** | – | 0.196*** | 0.113*** | 0 | – |

| Board Diversity | 0.043*** | – | 0 | – | – | 0 |

| Business Ethics | 0.097*** | 0.046*** | 0.008 | – | 0 | 0.148*** |

| Chairperson-CEO Separation | 0.039*** | – | 0.016 | – | – | – |

| Child Labor | – | – | 0.008 | 0 | – | 0.046*** |

| Climate Risk Mgmt. | – | 0.137*** | 0.064*** | – | 0.069** | 0.234*** |

| Clinical Trials | 0 | – | 0 | – | – | – |

| Collective Bargaining | 0.051*** | – | 0.011* | 0.072*** | – | – |

| Community and Society | 0.079*** | 0.086*** | 0.03* | 0.001 | – | 0.14*** |

| Corporate Governance | – | 0.048*** | – | – | 0.198*** | – |

| Corruption | 0.049*** | – | 0.022* | 0.072*** | 0.388*** | 0.124*** |

| Customer Relationship | 0.127*** | 0.097*** | 0.086*** | 0.027*** | – | 0.104*** |

| Diversity | 0.108*** | – | 0.066*** | 0.159*** | – | 0.04*** |

| ESG Incentives | 0.006 | 0 | – | – | – | – |

| Electromagnetic Fields | 0.021** | 0 | – | – | – | – |

| Employee Development | 0.018* | 0.221*** | 0.116*** | 0.067*** | 0.406*** | 0.149*** |

| Employee Turnover | 0.024* | – | 0 | – | – | – |

| Energy | 0.032** | 0.016*** | 0.029** | 0.103*** | 0.194*** | 0.046*** |

| Environmental Fines | 0 | – | 0 | – | – | 0 |

| Environmental Mgmt. System | 0.199*** | – | 0.009 | – | – | 0.205*** |

| Environmental Policy | 0.091*** | 0.098*** | 0.012 | 0.187*** | – | – |

| Environmental Reporting | 0.043** | 0.039*** | 0.007 | – | – | – |

| Financial Inclusion | 0 | – | – | – | 0.089*** | 0.061*** |

| Forests | 0.008 | 0.016* | – | – | – | – |

| GHG Emissions | 0.048*** | – | 0.002 | 0.033*** | – | 0.021** |

| GHG Policies | 0.086*** | 0.008** | 0.047** | – | – | – |

| GMOs | 0 | 0 | 0 | – | – | – |

| Global Compact Membership | 0.029** | – | 0 | – | – | – |

| Green Buildings | 0.072*** | 0.071*** | 0 | – | 0.304*** | 0.072*** |

| Green Products | 0.167*** | 0.037*** | 0.093*** | 0.024** | 0.351*** | 0.129*** |

| HIV Programs | 0 | – | 0.003 | – | – | – |

| Hazardous Waste | 0.021* | 0 | 0 | – | 0.09*** | – |

| Health and Safety | 0.049*** | 0.042*** | 0.049*** | 0.125*** | 0.148*** | 0.174*** |

| Human Rights | 0.072*** | 0 | 0.066*** | 0 | – | 0.14*** |

| Indigenous Rights | 0.033* | – | 0.006 | – | – | 0.087*** |

| Labor Practices | 0.005 | 0.063*** | 0.067*** | 0.153*** | 0.166*** | 0.129*** |

| Lobbying | 0.091*** | 0 | – | 0.013 | – | – |

| Non-GHG Air Emissions | 0.014 | – | 0 | – | – | – |

| Ozone-depleting Gases | 0 | – | 0 | – | – | – |

| Packaging | – | 0 | – | – | 0.128** | 0.033*** |

| Philanthropy | 0.028* | 0.075*** | 0.039*** | 0.073*** | – | 0 |

| Privacy and IT | 0.022* | 0.039*** | – | – | 0.276*** | 0.124*** |

| Product Safety | 0.048*** | 0.002 | 0.059*** | 0.062*** | 0.429*** | 0.216*** |

| Public Health | 0.022** | 0.011* | – | – | 0.029 | 0.074*** |

| Remuneration | 0 | 0.054*** | 0.117*** | 0.113*** | 0 | 0.223*** |

| Reporting Quality | 0.123*** | – | 0.107*** | – | – | 0 |

| Resource Efficiency | 0.014 | 0.114*** | 0.135*** | – | – | – |

| Responsible Marketing | 0 | 0.033*** | 0 | 0.002 | – | 0.081*** |

| Shareholders | – | – | 0.111*** | 0.089*** | – | – |

| Site Closure | 0.008 | 0 | – | – | – | – |

| Supply Chain | 0.253*** | 0.061*** | 0.042** | 0.05*** | 0.188*** | 0.128*** |

| Sustainable Finance | 0.108*** | 0.079*** | 0.063*** | – | 0.275*** | 0.098*** |

| Systemic Risk | – | 0.053*** | – | – | 0.349*** | 0.103*** |

| Taxes | 0.052*** | 0.01 | 0.03** | – | – | – |

| Toxic Spills | 0 | – | 0.001 | – | – | 0.113*** |

| Unions | – | – | 0.013 | – | – | 0.158*** |

| Waste | 0 | 0.005 | 0.035*** | 0.009 | – | 0.186*** |

| Water | 0.03** | 0.016*** | 0.028** | 0 | 0.035 | 0.175*** |

| Unclassified Indicators | Yes | Yes | Yes | Yes | Yes | Yes |

| R2 | 0.90 | 0.98 | 0.92 | 0.96 | 0.79 | 0.99 |

| Firms | 924 | 924 | 924 | 924 | 924 | 924 |

Significance levels: *P < 0.05, **P < 0.01, ***P < 0.001.

Non-negative least squares regression

Non-negative linear regressions of the ESG rating on the categories of the same rater. The constant term is excluded because the data have been normalized. The standard errors are bootstrapped. Non-existent categories are denoted by dashes. The three most important categories per rater, indicated by coefficient size, are printed in bold.

| Category . | Sustainalytics . | S&P Global . | Refinitiv . | Moody’s ESG . | MSCI . | KLD . |

|---|---|---|---|---|---|---|

| Access to Basic Services | 0.019 | – | 0 | – | 0.138*** | 0.065*** |

| Access to Healthcare | 0.051*** | 0.004 | 0 | – | 0.079*** | 0.051*** |

| Animal Welfare | 0.05*** | – | 0 | – | – | – |

| Anti-competitive Practices | – | – | 0.05*** | 0.023*** | 0 | 0.131*** |

| Audit | 0 | – | 0.026* | 0.084*** | – | – |

| Biodiversity | 0 | 0 | 0 | 0.028*** | 0.366*** | 0.076*** |

| Board | 0.072*** | – | 0.196*** | 0.113*** | 0 | – |

| Board Diversity | 0.043*** | – | 0 | – | – | 0 |

| Business Ethics | 0.097*** | 0.046*** | 0.008 | – | 0 | 0.148*** |

| Chairperson-CEO Separation | 0.039*** | – | 0.016 | – | – | – |

| Child Labor | – | – | 0.008 | 0 | – | 0.046*** |

| Climate Risk Mgmt. | – | 0.137*** | 0.064*** | – | 0.069** | 0.234*** |

| Clinical Trials | 0 | – | 0 | – | – | – |

| Collective Bargaining | 0.051*** | – | 0.011* | 0.072*** | – | – |

| Community and Society | 0.079*** | 0.086*** | 0.03* | 0.001 | – | 0.14*** |

| Corporate Governance | – | 0.048*** | – | – | 0.198*** | – |

| Corruption | 0.049*** | – | 0.022* | 0.072*** | 0.388*** | 0.124*** |

| Customer Relationship | 0.127*** | 0.097*** | 0.086*** | 0.027*** | – | 0.104*** |

| Diversity | 0.108*** | – | 0.066*** | 0.159*** | – | 0.04*** |

| ESG Incentives | 0.006 | 0 | – | – | – | – |

| Electromagnetic Fields | 0.021** | 0 | – | – | – | – |

| Employee Development | 0.018* | 0.221*** | 0.116*** | 0.067*** | 0.406*** | 0.149*** |

| Employee Turnover | 0.024* | – | 0 | – | – | – |

| Energy | 0.032** | 0.016*** | 0.029** | 0.103*** | 0.194*** | 0.046*** |

| Environmental Fines | 0 | – | 0 | – | – | 0 |

| Environmental Mgmt. System | 0.199*** | – | 0.009 | – | – | 0.205*** |

| Environmental Policy | 0.091*** | 0.098*** | 0.012 | 0.187*** | – | – |

| Environmental Reporting | 0.043** | 0.039*** | 0.007 | – | – | – |

| Financial Inclusion | 0 | – | – | – | 0.089*** | 0.061*** |

| Forests | 0.008 | 0.016* | – | – | – | – |

| GHG Emissions | 0.048*** | – | 0.002 | 0.033*** | – | 0.021** |

| GHG Policies | 0.086*** | 0.008** | 0.047** | – | – | – |

| GMOs | 0 | 0 | 0 | – | – | – |

| Global Compact Membership | 0.029** | – | 0 | – | – | – |

| Green Buildings | 0.072*** | 0.071*** | 0 | – | 0.304*** | 0.072*** |

| Green Products | 0.167*** | 0.037*** | 0.093*** | 0.024** | 0.351*** | 0.129*** |

| HIV Programs | 0 | – | 0.003 | – | – | – |

| Hazardous Waste | 0.021* | 0 | 0 | – | 0.09*** | – |

| Health and Safety | 0.049*** | 0.042*** | 0.049*** | 0.125*** | 0.148*** | 0.174*** |

| Human Rights | 0.072*** | 0 | 0.066*** | 0 | – | 0.14*** |

| Indigenous Rights | 0.033* | – | 0.006 | – | – | 0.087*** |

| Labor Practices | 0.005 | 0.063*** | 0.067*** | 0.153*** | 0.166*** | 0.129*** |

| Lobbying | 0.091*** | 0 | – | 0.013 | – | – |

| Non-GHG Air Emissions | 0.014 | – | 0 | – | – | – |

| Ozone-depleting Gases | 0 | – | 0 | – | – | – |

| Packaging | – | 0 | – | – | 0.128** | 0.033*** |

| Philanthropy | 0.028* | 0.075*** | 0.039*** | 0.073*** | – | 0 |

| Privacy and IT | 0.022* | 0.039*** | – | – | 0.276*** | 0.124*** |

| Product Safety | 0.048*** | 0.002 | 0.059*** | 0.062*** | 0.429*** | 0.216*** |

| Public Health | 0.022** | 0.011* | – | – | 0.029 | 0.074*** |

| Remuneration | 0 | 0.054*** | 0.117*** | 0.113*** | 0 | 0.223*** |

| Reporting Quality | 0.123*** | – | 0.107*** | – | – | 0 |

| Resource Efficiency | 0.014 | 0.114*** | 0.135*** | – | – | – |

| Responsible Marketing | 0 | 0.033*** | 0 | 0.002 | – | 0.081*** |

| Shareholders | – | – | 0.111*** | 0.089*** | – | – |

| Site Closure | 0.008 | 0 | – | – | – | – |

| Supply Chain | 0.253*** | 0.061*** | 0.042** | 0.05*** | 0.188*** | 0.128*** |

| Sustainable Finance | 0.108*** | 0.079*** | 0.063*** | – | 0.275*** | 0.098*** |

| Systemic Risk | – | 0.053*** | – | – | 0.349*** | 0.103*** |

| Taxes | 0.052*** | 0.01 | 0.03** | – | – | – |

| Toxic Spills | 0 | – | 0.001 | – | – | 0.113*** |

| Unions | – | – | 0.013 | – | – | 0.158*** |

| Waste | 0 | 0.005 | 0.035*** | 0.009 | – | 0.186*** |

| Water | 0.03** | 0.016*** | 0.028** | 0 | 0.035 | 0.175*** |

| Unclassified Indicators | Yes | Yes | Yes | Yes | Yes | Yes |

| R2 | 0.90 | 0.98 | 0.92 | 0.96 | 0.79 | 0.99 |

| Firms | 924 | 924 | 924 | 924 | 924 | 924 |

| Category . | Sustainalytics . | S&P Global . | Refinitiv . | Moody’s ESG . | MSCI . | KLD . |

|---|---|---|---|---|---|---|

| Access to Basic Services | 0.019 | – | 0 | – | 0.138*** | 0.065*** |

| Access to Healthcare | 0.051*** | 0.004 | 0 | – | 0.079*** | 0.051*** |

| Animal Welfare | 0.05*** | – | 0 | – | – | – |

| Anti-competitive Practices | – | – | 0.05*** | 0.023*** | 0 | 0.131*** |

| Audit | 0 | – | 0.026* | 0.084*** | – | – |

| Biodiversity | 0 | 0 | 0 | 0.028*** | 0.366*** | 0.076*** |

| Board | 0.072*** | – | 0.196*** | 0.113*** | 0 | – |

| Board Diversity | 0.043*** | – | 0 | – | – | 0 |

| Business Ethics | 0.097*** | 0.046*** | 0.008 | – | 0 | 0.148*** |

| Chairperson-CEO Separation | 0.039*** | – | 0.016 | – | – | – |

| Child Labor | – | – | 0.008 | 0 | – | 0.046*** |

| Climate Risk Mgmt. | – | 0.137*** | 0.064*** | – | 0.069** | 0.234*** |

| Clinical Trials | 0 | – | 0 | – | – | – |

| Collective Bargaining | 0.051*** | – | 0.011* | 0.072*** | – | – |

| Community and Society | 0.079*** | 0.086*** | 0.03* | 0.001 | – | 0.14*** |

| Corporate Governance | – | 0.048*** | – | – | 0.198*** | – |

| Corruption | 0.049*** | – | 0.022* | 0.072*** | 0.388*** | 0.124*** |

| Customer Relationship | 0.127*** | 0.097*** | 0.086*** | 0.027*** | – | 0.104*** |

| Diversity | 0.108*** | – | 0.066*** | 0.159*** | – | 0.04*** |

| ESG Incentives | 0.006 | 0 | – | – | – | – |

| Electromagnetic Fields | 0.021** | 0 | – | – | – | – |

| Employee Development | 0.018* | 0.221*** | 0.116*** | 0.067*** | 0.406*** | 0.149*** |

| Employee Turnover | 0.024* | – | 0 | – | – | – |

| Energy | 0.032** | 0.016*** | 0.029** | 0.103*** | 0.194*** | 0.046*** |

| Environmental Fines | 0 | – | 0 | – | – | 0 |

| Environmental Mgmt. System | 0.199*** | – | 0.009 | – | – | 0.205*** |

| Environmental Policy | 0.091*** | 0.098*** | 0.012 | 0.187*** | – | – |

| Environmental Reporting | 0.043** | 0.039*** | 0.007 | – | – | – |

| Financial Inclusion | 0 | – | – | – | 0.089*** | 0.061*** |

| Forests | 0.008 | 0.016* | – | – | – | – |

| GHG Emissions | 0.048*** | – | 0.002 | 0.033*** | – | 0.021** |

| GHG Policies | 0.086*** | 0.008** | 0.047** | – | – | – |

| GMOs | 0 | 0 | 0 | – | – | – |

| Global Compact Membership | 0.029** | – | 0 | – | – | – |

| Green Buildings | 0.072*** | 0.071*** | 0 | – | 0.304*** | 0.072*** |

| Green Products | 0.167*** | 0.037*** | 0.093*** | 0.024** | 0.351*** | 0.129*** |

| HIV Programs | 0 | – | 0.003 | – | – | – |

| Hazardous Waste | 0.021* | 0 | 0 | – | 0.09*** | – |

| Health and Safety | 0.049*** | 0.042*** | 0.049*** | 0.125*** | 0.148*** | 0.174*** |

| Human Rights | 0.072*** | 0 | 0.066*** | 0 | – | 0.14*** |

| Indigenous Rights | 0.033* | – | 0.006 | – | – | 0.087*** |

| Labor Practices | 0.005 | 0.063*** | 0.067*** | 0.153*** | 0.166*** | 0.129*** |

| Lobbying | 0.091*** | 0 | – | 0.013 | – | – |

| Non-GHG Air Emissions | 0.014 | – | 0 | – | – | – |

| Ozone-depleting Gases | 0 | – | 0 | – | – | – |

| Packaging | – | 0 | – | – | 0.128** | 0.033*** |

| Philanthropy | 0.028* | 0.075*** | 0.039*** | 0.073*** | – | 0 |

| Privacy and IT | 0.022* | 0.039*** | – | – | 0.276*** | 0.124*** |

| Product Safety | 0.048*** | 0.002 | 0.059*** | 0.062*** | 0.429*** | 0.216*** |

| Public Health | 0.022** | 0.011* | – | – | 0.029 | 0.074*** |

| Remuneration | 0 | 0.054*** | 0.117*** | 0.113*** | 0 | 0.223*** |

| Reporting Quality | 0.123*** | – | 0.107*** | – | – | 0 |

| Resource Efficiency | 0.014 | 0.114*** | 0.135*** | – | – | – |

| Responsible Marketing | 0 | 0.033*** | 0 | 0.002 | – | 0.081*** |

| Shareholders | – | – | 0.111*** | 0.089*** | – | – |

| Site Closure | 0.008 | 0 | – | – | – | – |

| Supply Chain | 0.253*** | 0.061*** | 0.042** | 0.05*** | 0.188*** | 0.128*** |

| Sustainable Finance | 0.108*** | 0.079*** | 0.063*** | – | 0.275*** | 0.098*** |

| Systemic Risk | – | 0.053*** | – | – | 0.349*** | 0.103*** |

| Taxes | 0.052*** | 0.01 | 0.03** | – | – | – |

| Toxic Spills | 0 | – | 0.001 | – | – | 0.113*** |

| Unions | – | – | 0.013 | – | – | 0.158*** |

| Waste | 0 | 0.005 | 0.035*** | 0.009 | – | 0.186*** |

| Water | 0.03** | 0.016*** | 0.028** | 0 | 0.035 | 0.175*** |

| Unclassified Indicators | Yes | Yes | Yes | Yes | Yes | Yes |

| R2 | 0.90 | 0.98 | 0.92 | 0.96 | 0.79 | 0.99 |

| Firms | 924 | 924 | 924 | 924 | 924 | 924 |

Significance levels: *P < 0.05, **P < 0.01, ***P < 0.001.

The regression coefficients can be interpreted as category weights. Because all variables have been normalized, the magnitude of the coefficients is comparable and indicates the relative importance of a category. Most coefficients are highly significant. Some coefficients are not significant at the 5% threshold, which means that our estimated weight is uncertain. However, those coefficients are much smaller in magnitude than the significant coefficients; most of them are close to zero and thus do not seem to have an important influence on the aggregate ESG rating.

There are substantial differences in the weights for different raters. For example, the three most important categories for KLD are Climate Risk Management, Product Safety, and Remuneration. For Moody’s ESG, the top three are Diversity, Environmental Policy, and Labor Practices. This means there is no overlap in the three most important categories for these two raters. Only Resource Efficiency and Climate Risk Management are among the three most important categories for more than one rater. At the same time, some categories have zero weight for all raters, such as Clinical Trials and Environmental Fines, genetically modified organisms (GMOs), and Ozone-depleting Gases. These observations highlight that different raters have substantially different views about the most important categories. In other words, there is weight divergence between raters.

The estimation of the aggregation function entails several assumptions. To ensure the robustness of our results, we evaluate several alternative specifications. The results are summarized in Table VII. None offers substantial improvements in the quality of fit over the non-negative linear regression.

Quality of fit

Comparison of the quality of fit in terms of R2 for the estimation of rater-specific aggregation functions using different specifications. NNLS stands for non-negative least squares and OLS for ordinary least squares. NN stands for neural network with linear activation function and NN ReLU for a neural network with a non-linear ReLU activation function. RF stands for random forest. The symbol * indicates that the R2 is reported for a testing set consisting of a randomly chosen 10% of the sample. The three last lines report results from the original method with different underlying data. For NNLS SASB, the category scores were calculated based on the SASB taxonomy; for NNLS indicators, the original indicators were used without any taxonomy, and for NNLS 2017, the underlying data are from 2017 instead of from 2014. Given that KLD does not offer any data for 2017, no value is reported.

| Specification . | KLD . | Moody’s ESG . | S&P Global . | Sustainalytics . | MSCI . | Refinitiv . |

|---|---|---|---|---|---|---|

| NNLS | 0.99 | 0.96 | 0.98 | 0.90 | 0.79 | 0.92 |

| OLS | 0.99 | 0.96 | 0.98 | 0.91 | 0.79 | 0.92 |

| NNLS* | 0.98 | 0.94 | 0.98 | 0.89 | 0.74 | 0.83 |

| NN* | 0.98 | 0.94 | 0.98 | 0.88 | 0.83 | 0.83 |

| NN ReLU* | 0.96 | 0.96 | 0.98 | 0.83 | 0.85 | 0.80 |

| RF* | 0.73 | 0.91 | 0.97 | 0.85 | 0.56 | 0.86 |

| NNLS SASB | 0.98 | 0.96 | 0.98 | 0.87 | 0.76 | 0.92 |

| NNLS Indicators | 1 | 0.96 | 0.99 | 0.90 | 0.82 | 0.94 |

| NNLS 2017 | 0.96 | 0.98 | 0.91 | 0.68 | 0.82 |

| Specification . | KLD . | Moody’s ESG . | S&P Global . | Sustainalytics . | MSCI . | Refinitiv . |

|---|---|---|---|---|---|---|

| NNLS | 0.99 | 0.96 | 0.98 | 0.90 | 0.79 | 0.92 |

| OLS | 0.99 | 0.96 | 0.98 | 0.91 | 0.79 | 0.92 |

| NNLS* | 0.98 | 0.94 | 0.98 | 0.89 | 0.74 | 0.83 |

| NN* | 0.98 | 0.94 | 0.98 | 0.88 | 0.83 | 0.83 |

| NN ReLU* | 0.96 | 0.96 | 0.98 | 0.83 | 0.85 | 0.80 |

| RF* | 0.73 | 0.91 | 0.97 | 0.85 | 0.56 | 0.86 |

| NNLS SASB | 0.98 | 0.96 | 0.98 | 0.87 | 0.76 | 0.92 |

| NNLS Indicators | 1 | 0.96 | 0.99 | 0.90 | 0.82 | 0.94 |

| NNLS 2017 | 0.96 | 0.98 | 0.91 | 0.68 | 0.82 |

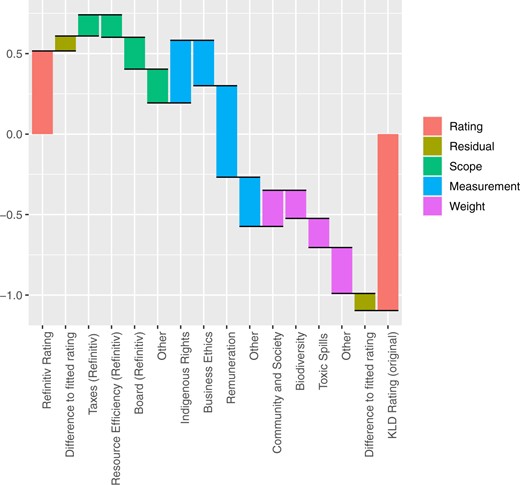

Quality of fit